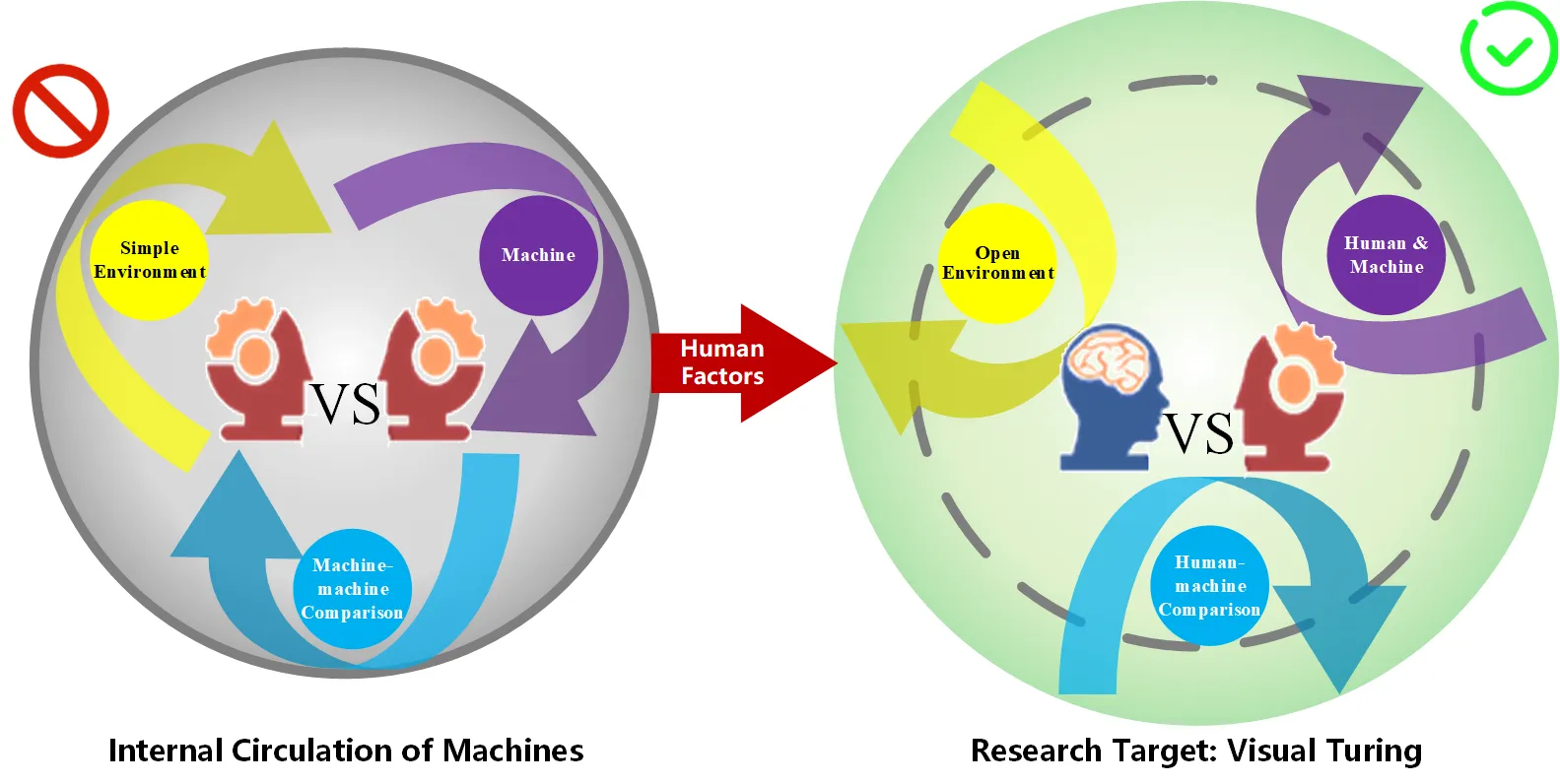

Our previous research has primarily been dedicated to evaluating and exploring machine vision intelligence. This research encompasses task modeling, environment construction, evaluation techniques, and human-machine comparisons. We firmly believe that the development of AI is inherently interconnected with human factors. Hence, drawing inspiration from the renowned Turing Test, we have focused our investigation on the concept of the Visual Turing Test, aiming to integrate human elements into the evaluation of dynamic visual tasks. The ultimate goal of this work is to assess and analyze machine vision intelligence by benchmarking it against human abilities. We believe that effective evaluation techniques are the foundation for achieving trustworthy and secure artificial general intelligence. The following are several key aspects:

What are the abilities of humans? Designing more human-like tasks.

We use Visual Object Tracking (VOT) as a representative task to explore dynamic visual abilities. VOT holds a pivotal role in computer vision; however, its original definition imposes excessive constraints that hinder alignment with human dynamic visual tracking abilities. To address this problem, we adopted a humanoid modeling perspective and expanded the original VOT definition. By eliminating the presumption of continuous motion, we introduced a more humanoid-oriented Global Instance Tracking (GIT) task. This expansion of the research objectives moved VOT from a perceptual level, which involves locating targets in short video sequences through visual feature contrasts, to a cognitive level, which addresses the continuous localization of targets in long videos without presuming continuous motion. Building upon this, we incorporated semantic information into the GIT task and introduced the Multi-modal GIT (MGIT) task. The goal is to integrate a human-like understanding of long videos with hierarchically structured semantic labels, thereby further extending the research objectives to include visual reasoning within complex spatio-temporal causal relationships.
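To make the effect of dropping the continuous-motion presumption concrete, here is a minimal sketch. It is not the GIT formulation itself: the function names, the `scale` parameter, and the box convention `(x, y, width, height)` are assumptions chosen for illustration. Under the continuous-motion presumption, a tracker only needs to search near the previous target position; without it (for example, after a shot cut), the target may reappear anywhere, so the search must cover the whole frame.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height), an assumed convention


def search_region(prev_box: Box, frame_size: Tuple[int, int],
                  scale: float = 2.0,
                  assume_continuous_motion: bool = True) -> Box:
    """Return the region a tracker would search in the next frame.

    With the continuous-motion presumption, the region is a window around
    the previous box, enlarged by `scale` and clipped to the frame.
    Without it, the region is the whole frame.
    """
    frame_w, frame_h = frame_size
    if not assume_continuous_motion:
        return (0.0, 0.0, float(frame_w), float(frame_h))
    x, y, w, h = prev_box
    cx, cy = x + w / 2, y + h / 2
    region_w, region_h = w * scale, h * scale
    left = max(0.0, cx - region_w / 2)
    top = max(0.0, cy - region_h / 2)
    right = min(float(frame_w), cx + region_w / 2)
    bottom = min(float(frame_h), cy + region_h / 2)
    return (left, top, right - left, bottom - top)


def contains_center(region: Box, box: Box) -> bool:
    """Check whether the center of `box` lies inside `region`."""
    rx, ry, rw, rh = region
    x, y, w, h = box
    cx, cy = x + w / 2, y + h / 2
    return rx <= cx <= rx + rw and ry <= cy <= ry + rh


# Toy scenario: after a shot cut, the target jumps across the frame.
frame_size = (1280, 720)
prev_box = (100.0, 100.0, 50.0, 50.0)
box_after_cut = (900.0, 500.0, 50.0, 50.0)

local_region = search_region(prev_box, frame_size)
global_region = search_region(prev_box, frame_size, assume_continuous_motion=False)

local_finds_target = contains_center(local_region, box_after_cut)
global_finds_target = contains_center(global_region, box_after_cut)
```

In this toy scenario the local window misses the relocated target while the whole-frame search still contains it, which is the kind of situation a task without the continuous-motion presumption has to handle.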

What are the living environments of humans? Constructing more comprehensive and realistic datasets.

The environment in which humans reside is characterized by complexity and constant change. However, current research predominantly employs static and limited datasets as closed experimental environments. These toy examples fail to provide machines with authentic human-like visual intelligence. To address this limitation, we draw inspiration from film theory and propose a framework for decoupling video narrative content. In doing so, we have developed VideoCube, the largest-scale object tracking benchmark. Expanding on this work, we integrate diverse environments from the field of VOT to create SOTVerse, a dynamic and open task space comprising 12.56 million frames. Within this task space, researchers can efficiently construct different subspaces to train algorithms, thereby improving their visual generalization across various scenarios. Furthermore, our research also focuses on visual robustness. Leveraging a bio-inspired flapping-wing drone developed by our team, we establish BioDrone, the first flapping-wing drone-based benchmark, to enhance visual robustness in challenging environments.
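The idea of constructing subspaces from a larger task space can be sketched as filtering sequence metadata. The snippet below is only an illustration under stated assumptions: the `Sequence` record, its fields, the tag names, and the toy entries are all hypothetical and do not reflect the actual SOTVerse interface or data.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass(frozen=True)
class Sequence:
    """Hypothetical metadata record for one video sequence."""
    name: str
    source: str                      # hypothetical originating benchmark
    num_frames: int
    challenge_tags: FrozenSet[str]   # hypothetical challenge labels


def build_subspace(task_space: List[Sequence],
                   predicate: Callable[[Sequence], bool]) -> List[Sequence]:
    """Select the sequences of the task space that satisfy `predicate`."""
    return [seq for seq in task_space if predicate(seq)]


def total_frames(sequences: List[Sequence]) -> int:
    """Sum the frame counts of the given sequences."""
    return sum(seq.num_frames for seq in sequences)


# Toy task space; every entry is made up for illustration.
task_space = [
    Sequence("seq_a", "benchmark_x", 12000, frozenset({"long_term", "shot_cut"})),
    Sequence("seq_b", "benchmark_y", 800, frozenset({"camera_shake"})),
    Sequence("seq_c", "benchmark_x", 15000, frozenset({"long_term"})),
    Sequence("seq_d", "benchmark_y", 600, frozenset({"camera_shake", "small_target"})),
]

# A subspace aimed at robustness under camera shake.
robust_subspace = build_subspace(
    task_space, lambda seq: "camera_shake" in seq.challenge_tags
)
robust_names = [seq.name for seq in robust_subspace]
robust_frames = total_frames(robust_subspace)
```

Expressing each subspace as a predicate keeps the task space open: a new scenario only needs a new selection rule, not a new dataset.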

How significant is the disparity between human and machine dynamic vision abilities? Utilizing human abilities as a baseline to evaluate machine intelligence.

Computer scientists typically use large-scale datasets to evaluate machine models, while neuroscientists typically employ simple experimental environments to evaluate human subjects. This discrepancy makes it challenging to integrate human and machine evaluation into a unified framework for comparison and analysis. To address this question, we construct an experimental environment based on SOTVerse that enables a fair comparison between human and machine dynamic visual abilities. The selected sequences provide a thorough examination of the perceptual abilities, cognitive abilities, and robust tracking abilities of humans and machines. On this foundation, we design a human-machine dynamic visual capability evaluation framework. Finally, we carry out a fine-grained experimental analysis from the perspectives of human-machine comparison and human-machine collaboration. The experimental results demonstrate that representative tracking algorithms have gradually narrowed the gap with human subjects. Furthermore, both humans and machines exhibit unique strengths in dynamic visual tasks, suggesting significant potential for human-machine collaboration.

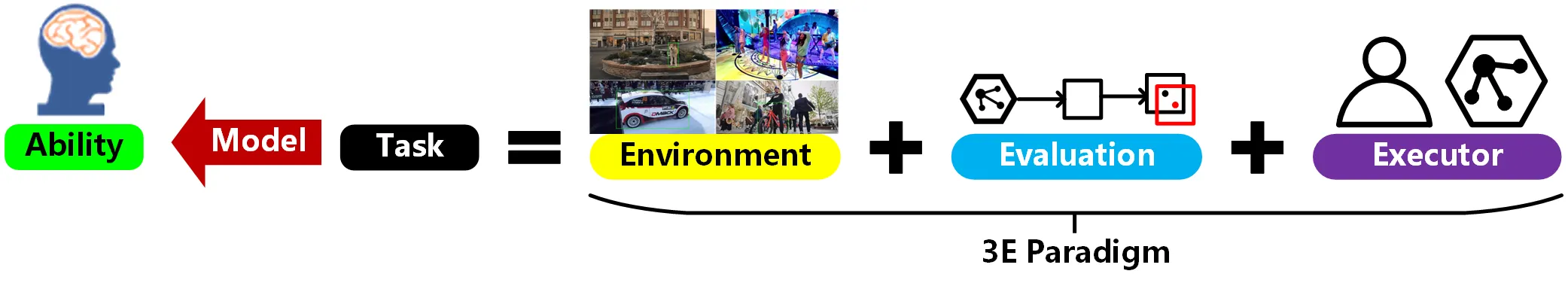

3E paradigm: Environment, Evaluation and Executor.

This human-centered evaluation concept is referred to as the Visual Turing Test, and we have presented our thoughts and future prospects in this direction through a comprehensive review of intelligent evaluation techniques. This body of research can be summarized using the 3E paradigm. To enable machines to acquire human abilities, we need to construct a humanoid proxy task and execute it through interactions among the environment, evaluation, and executors. Ultimately, the executors' performance reflects their level of ability, and their upper limit of ability is continuously improved through ongoing iterations. We hope this research can create a comprehensive system that lays a solid foundation for improving the dynamic visual abilities of machines.
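The interaction among environment, evaluation, and executors can be sketched as a small loop. This is a toy illustration only, not the framework used in our work: the scalar task format, the tolerance-based score, and the executor names are all assumptions, and the numbers are made up.

```python
from typing import Callable, Dict, List, Tuple

# Toy stand-in for a proxy task: (observation, ground-truth answer).
Task = Tuple[float, float]
Executor = Callable[[float], float]


def evaluate(predictions: List[float], truths: List[float],
             tolerance: float = 0.5) -> float:
    """Evaluation: fraction of predictions within `tolerance` of the truth."""
    hits = sum(abs(p - t) <= tolerance for p, t in zip(predictions, truths))
    return hits / len(truths)


def run_3e(environment: List[Task],
           executors: Dict[str, Executor]) -> Dict[str, float]:
    """Run every executor on the same environment and score it identically."""
    truths = [truth for _, truth in environment]
    scores = {}
    for name, executor in executors.items():
        predictions = [executor(obs) for obs, _ in environment]
        scores[name] = evaluate(predictions, truths)
    return scores


# Environment: four toy tasks whose answer is twice the observation.
environment = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

# Executors: any callable can take part, whether it wraps a model
# or replays recorded responses. Both entries here are hypothetical.
executors = {
    "reference_executor": lambda obs: 2.0 * obs,
    "candidate_executor": lambda obs: 2.0 * obs + (1.0 if obs > 2.5 else 0.0),
}

scores = run_3e(environment, executors)
```

Because all executors share one environment and one evaluation function, their scores are directly comparable, which is the property the 3E paradigm relies on when benchmarking machines against a human baseline.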